Controlling QPS in a Fan-Out Microservice Architecture

Shabbir Goher

Feb 14, 2025 · 4 min read

In this post, we explore how TAS, Truecaller's ad server, implements QPS control within a fan-out microservice architecture. In this architecture, a single ad request triggers multiple API calls to external ad demand sources. Effective QPS management in such a setup is critical for maintaining platform stability, optimizing ad delivery, and getting the most out of each demand partner without overwhelming their infrastructure.

With a user base of over 400 million, Truecaller generates billions of ad opportunities daily. The Truecaller AdServer (TAS) integrates with multiple demand partners, each bringing unique capabilities. While some are market leaders managing billions of requests daily, others have strict QPS (Queries Per Second) constraints. Calling all demand partners simultaneously could overwhelm the less scalable ones, leading to inefficiencies.

Adding to this complexity, TAS experiences traffic fluctuations throughout the day, reaching peaks of over 600k QPS. Therefore, it is essential to enforce QPS limits to prevent overloading demand partners during high-traffic periods.

The Challenge of Fan-Out Architectures

Imagine a scenario where your service, potentially running on auto-scaling infrastructure, needs to fetch data from multiple third-party APIs to fulfill a single user request. While powerful, this "fan-out" pattern can quickly lead to a surge in outgoing requests, especially under heavy load.

Here's what we need to consider:

- Unbounded Incoming Traffic: Our service should be capable of handling a potentially unlimited number of incoming requests.

- Third-Party API Limits: Each external API we interact with will have its own QPS limitations, which we must respect.

- Dynamic QPS Limits: These limits might change over time, requiring flexibility in our solution.

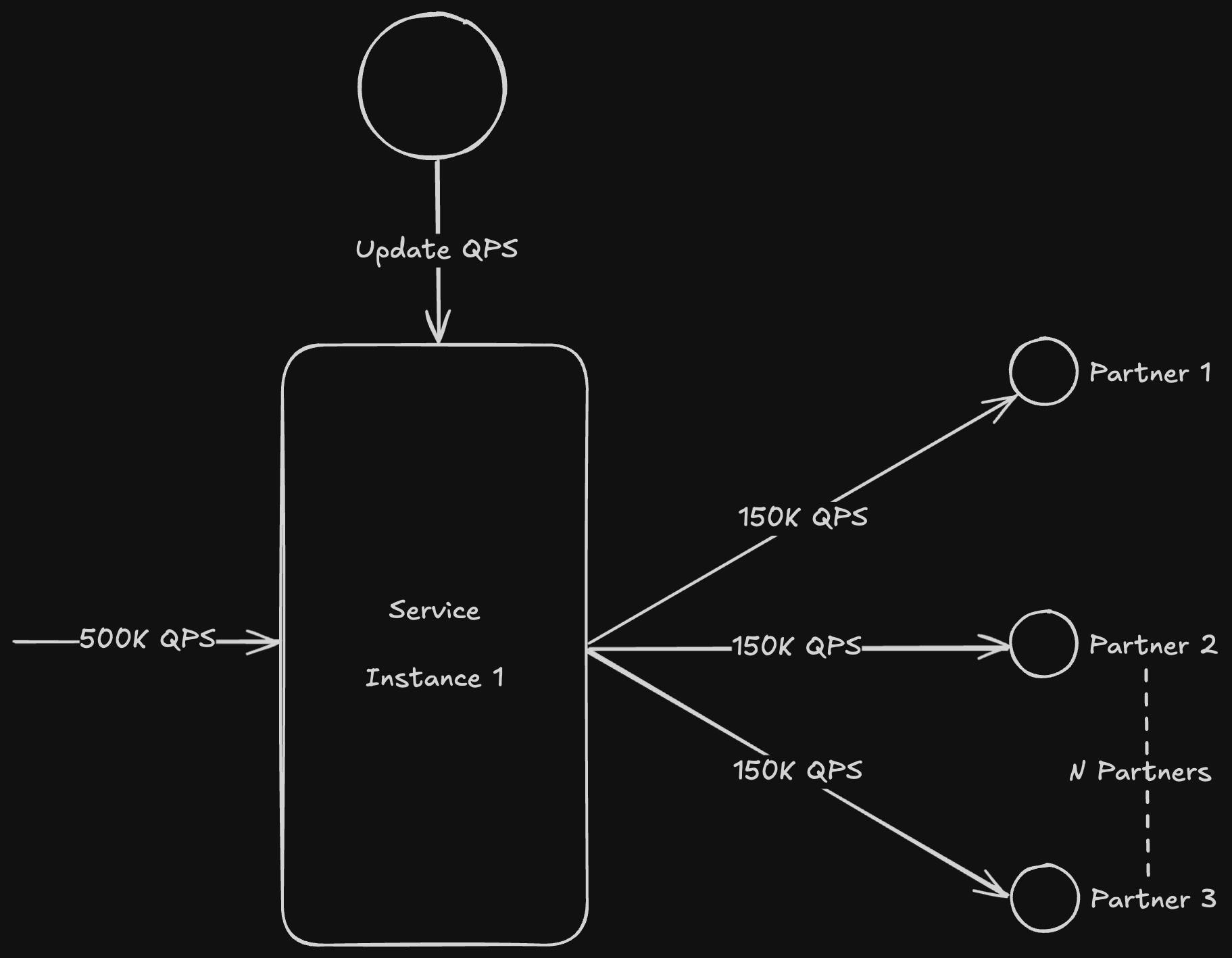

Single Instance Solution: The Token Bucket Algorithm

Let's start by assuming our service runs on a single, powerful instance. A popular and effective approach to QPS control is the Token Bucket algorithm.

Here's how it works:

- Configuration: We configure the RateLimiter for each external API with:

• limitForPeriod: The maximum allowed QPS (e.g., 150 QPS).

• limitRefreshPeriod: How often the bucket is replenished with new tokens (e.g., every 1 second).

• timeoutDuration: Maximum wait time for a request to acquire a token (set to 0 for immediate rejection if no token is available).

- Token Acquisition: Before making a request to an external API, our service attempts to acquire a token from the corresponding RateLimiter.

- Request Execution/Rejection:

• If a token is available, the request proceeds.

• If no token is available (the bucket is empty), the request is immediately rejected or handled gracefully.
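The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the TAS implementation: the parameter names mirror the configuration listed above, while the `RateLimiter` class body, `try_acquire`, `call_partner`, and the injectable `clock` argument are assumptions made for the example. The timeout is fixed at 0, so a request that finds the bucket empty is rejected immediately.

```python
import threading
import time


class RateLimiter:
    """Allows at most `limit_for_period` calls per `limit_refresh_period` seconds."""

    def __init__(self, limit_for_period, limit_refresh_period=1.0, clock=time.monotonic):
        self.limit_for_period = limit_for_period
        self.limit_refresh_period = limit_refresh_period
        self._clock = clock  # hypothetical hook so the clock can be replaced in tests
        self._tokens = limit_for_period
        self._period_start = clock()
        self._lock = threading.Lock()

    def try_acquire(self):
        """Take one token if available; return False right away otherwise (timeout 0)."""
        with self._lock:
            elapsed = self._clock() - self._period_start
            if elapsed >= self.limit_refresh_period:
                # One or more refresh periods have passed: refill the bucket.
                periods = int(elapsed // self.limit_refresh_period)
                self._period_start += periods * self.limit_refresh_period
                self._tokens = self.limit_for_period
            if self._tokens > 0:
                self._tokens -= 1
                return True
            return False


def call_partner(limiter, send_request):
    """Call the partner only if a token is available; otherwise skip it."""
    if not limiter.try_acquire():
        return None  # handled gracefully: this partner is left out of the fan-out
    return send_request()


# One limiter per external API, e.g. 150 QPS refreshed every second.
partner_limiter = RateLimiter(limit_for_period=150, limit_refresh_period=1.0)
```

Returning `None` for a rejected call is one way to handle rejection gracefully: the ad request can still be served from the partners that did have tokens.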

This single-instance solution is effective only as long as one machine can handle all the traffic. With TAS handling billions of requests a day and peaking at over 600k QPS, a single machine is not enough.

Scaling Out: Challenges and Solutions

Distributing our service across multiple instances introduces the need for coordination. While a centralized cache like Redis can store shared RateLimiters, it introduces additional network calls and latency.

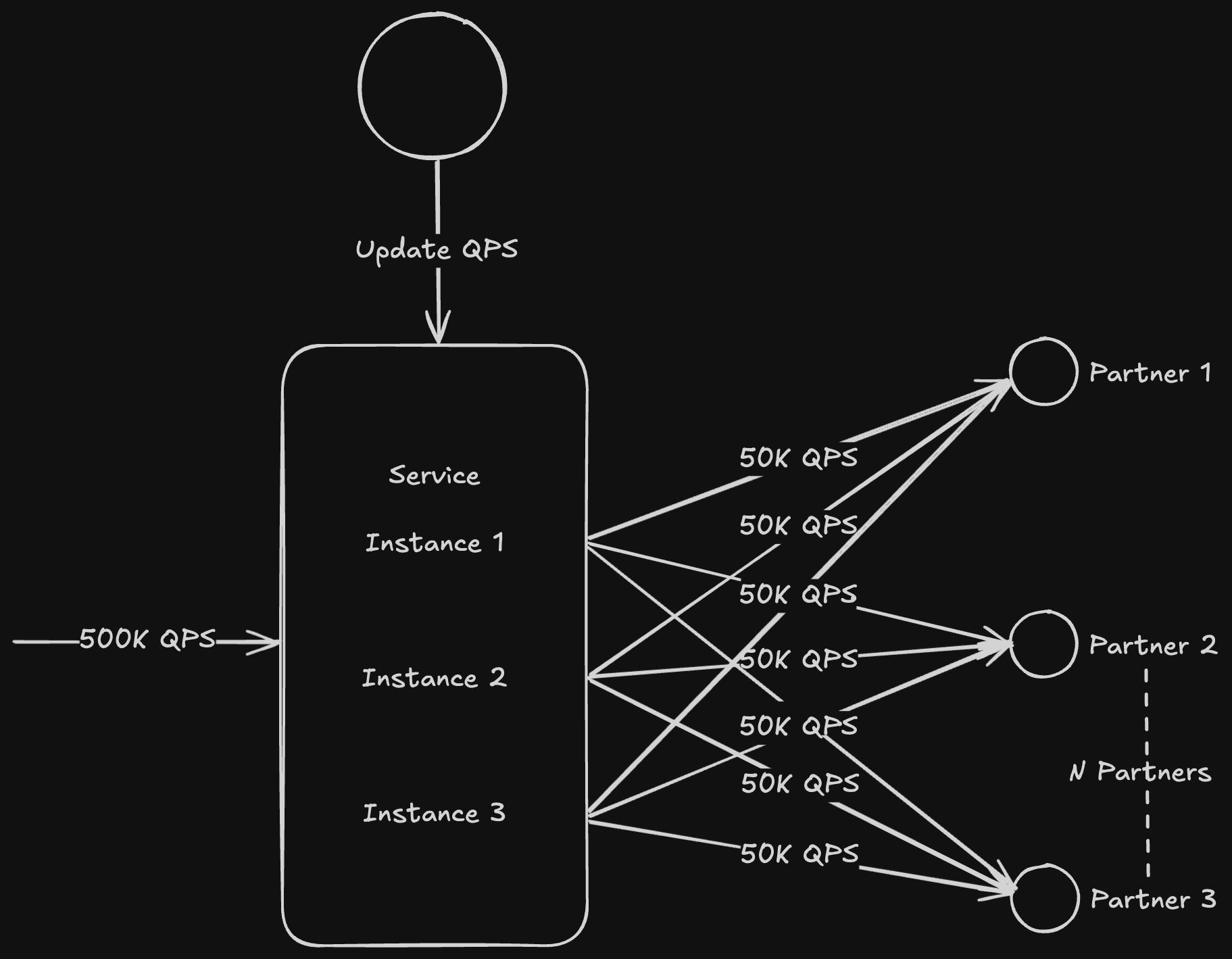

A More Efficient Approach

We leverage the predictability of our traffic to pre-determine the number of instances (N) required. We then set the limitForPeriod of each RateLimiter to QPS/N, which divides the allowed QPS evenly across the instances.

For instance, if our service runs on 3 instances and a partner allows a maximum of 150K QPS, we can allocate 50K QPS to each instance (150K/3).
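The same arithmetic as a small Python helper. The function name `per_instance_limit` is made up for this example, and rounding down is an assumption: when the partner limit does not divide evenly, flooring keeps the sum of all per-instance limits at or below the partner's limit.

```python
def per_instance_limit(partner_qps, instance_count):
    """Share of a partner's QPS limit that one instance may use (QPS / N, rounded down)."""
    if instance_count < 1:
        raise ValueError("instance_count must be at least 1")
    return partner_qps // instance_count


# 150K QPS across 3 instances gives 50K QPS each.
print(per_instance_limit(150_000, 3))
```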

This solution works well under consistent traffic conditions. In real-world scenarios, however, traffic varies between peak and off-peak hours, and provisioning a fixed number of instances for peak traffic wastes computing resources during off-peak times. To address this, we need an autoscaling system that adjusts resources as traffic fluctuates.

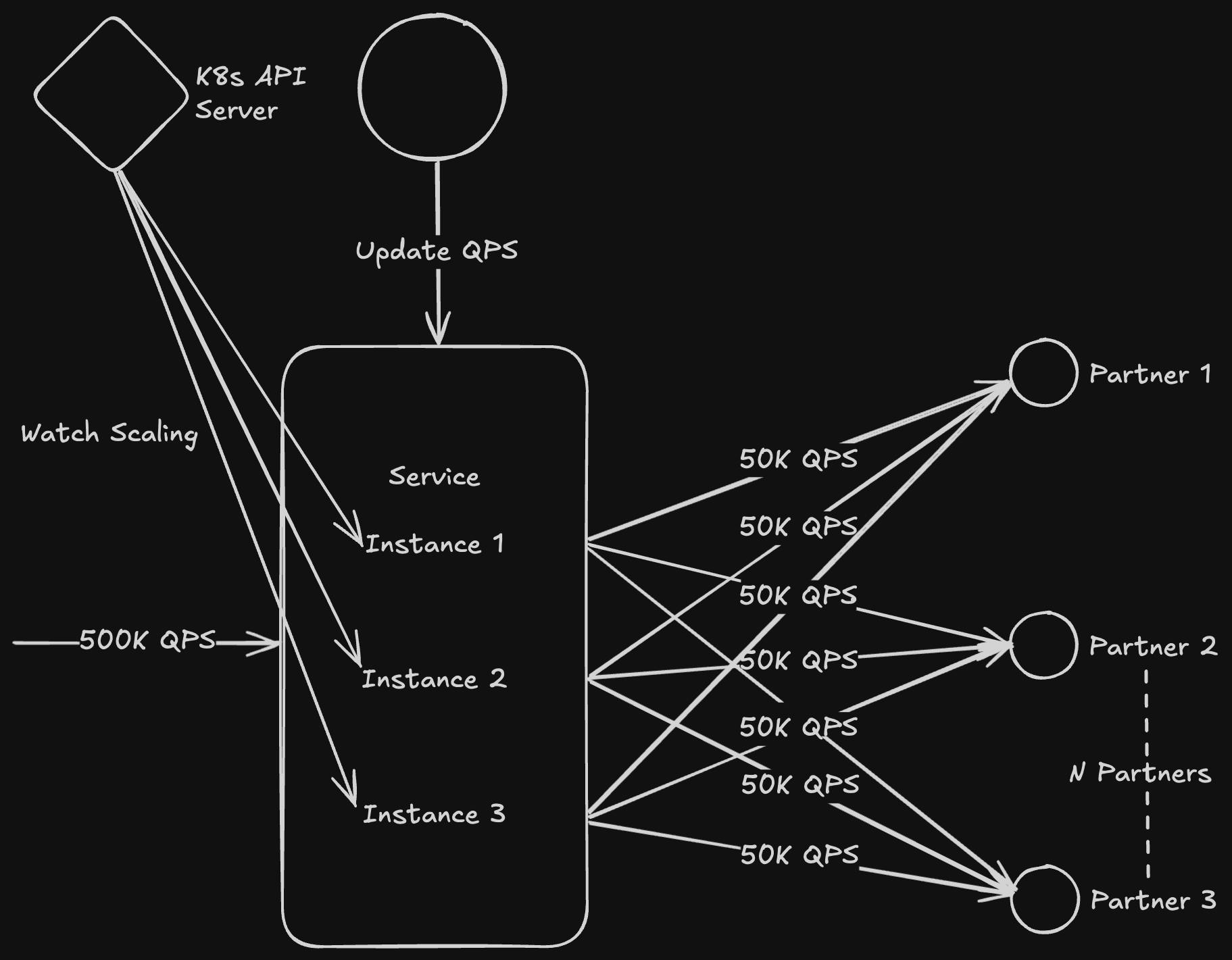

Auto-Scaling with Kubernetes

Imagine the service from the previous section autoscaling from 3 to 4 instances based on load. In such a scenario, each instance would need to be notified of the updated QPS allocation. With the additional instance, the allowed QPS per instance would decrease from 50K to 37.5K (i.e., 150K ÷ 4).

We achieve this using Kubernetes Horizontal Pod Autoscaling (HPA), which adjusts the number of instances based on the current load. By using Kubernetes Watch to monitor autoscaling events in real time, our service tracks and responds to changes in the number of active instances.

The Kubernetes Watch API provides instant notifications about pod (instance) updates, enabling our service to dynamically recalibrate the limitForPeriod of its RateLimiters. This ensures consistent QPS control and optimal resource utilization, even during fluctuating traffic patterns.
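A rough sketch of the recalibration step, in Python. It does not call Kubernetes: the events are passed in as plain `(event_type, pod_name)` pairs, which in a real deployment would come from a watch on the service's pods. The event types `ADDED`, `MODIFIED`, and `DELETED` are the ones the Watch API reports; the `InstanceAwareLimit` class and its method names are hypothetical. As a simplification, every known pod is counted, whereas a production version would likely count only pods that are ready to serve traffic.

```python
class InstanceAwareLimit:
    """Tracks live pods and recomputes this pod's share of a partner's QPS limit."""

    def __init__(self, partner_qps):
        self.partner_qps = partner_qps
        self.pods = set()
        self.limit_for_period = partner_qps

    def on_event(self, event_type, pod_name):
        """Apply one watch event and return the new per-pod limit."""
        if event_type == "DELETED":
            self.pods.discard(pod_name)
        else:  # "ADDED" or "MODIFIED"
            self.pods.add(pod_name)
        # Never divide by zero: this pod itself is always running.
        instance_count = max(len(self.pods), 1)
        self.limit_for_period = self.partner_qps // instance_count
        return self.limit_for_period


limit = InstanceAwareLimit(partner_qps=150_000)
for name in ("pod-a", "pod-b", "pod-c"):
    limit.on_event("ADDED", name)
three_pods = limit.limit_for_period        # 150K / 3
four_pods = limit.on_event("ADDED", "pod-d")  # scale out to 4 pods
```

The value returned by `on_event` is what each instance would write into the limitForPeriod of its RateLimiter for that partner.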

Pods may receive different amounts of incoming traffic because of load balancer behavior, but this variation does not affect the overall QPS limit. Each pod enforces its own rate limit based on its allocated QPS, and the allocations add up to the partner's limit, so the total outgoing traffic never exceeds it. The trade-off is that a lightly loaded pod may leave part of its allocation unused.
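A small worked example makes the bound concrete. The traffic figures below are invented for illustration, and `outgoing_per_pod` is a hypothetical helper that models each pod as sending the smaller of its incoming traffic and its share of the partner limit.

```python
def outgoing_per_pod(incoming_per_pod, partner_qps):
    """Outgoing QPS per pod when each pod caps itself at partner_qps / pod count."""
    share = partner_qps // len(incoming_per_pod)
    return [min(incoming, share) for incoming in incoming_per_pod]


# Four pods with uneven incoming traffic, partner limit of 150K QPS (37.5K per pod).
incoming = [70_000, 50_000, 20_000, 10_000]
outgoing = outgoing_per_pod(incoming, partner_qps=150_000)
total = sum(outgoing)
print(outgoing, total)
```

The two busy pods are capped at their share while the two quiet pods send everything they receive, so the total stays below the partner's limit regardless of how the load balancer spreads requests.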

What’s Next?

Controlling QPS is vital in microservice architectures, especially in fan-out scenarios. By combining the Token Bucket algorithm with instance-aware configuration and Kubernetes' autoscaling capabilities, we can build a robust and adaptable solution that ensures reliable integration with external APIs while maximizing efficiency and respecting service limits.

Here you can find some references you can use to get started:
